A new horizon

For 30 years, supercomputers have followed a curious rule: every ten years, their speed has multiplied by a thousand. Moreover, each new milestone (from megaflops to gigaflops, from gigaflops to teraflops and from there to petaflops) has been reached in a year ending in 8. This will be the first time the unwritten rule is broken: there is no way we will have exaflop-capable machines by 2018.
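To make the rule concrete, the short Python sketch below (our own illustration, not taken from the article's sources) turns "a thousandfold every ten years" into a year-on-year factor and projects the exaflop year. It assumes perfectly steady exponential growth and takes 2008, the year the rule implies for the petaflop, as the starting point; `yearly_growth_factor` and `years_to_multiply` are hypothetical helper names.

```python
import math

GROWTH_PER_DECADE = 1000  # the article's rule: speed x1000 every ten years


def yearly_growth_factor(per_decade=GROWTH_PER_DECADE):
    """Constant yearly factor that compounds to `per_decade` after 10 years."""
    return per_decade ** (1 / 10)


def years_to_multiply(factor, per_decade=GROWTH_PER_DECADE):
    """Years needed to multiply speed by `factor` under steady exponential growth."""
    return 10 * math.log(factor) / math.log(per_decade)


PETAFLOP_YEAR = 2008  # assumption: the petaflop year implied by the rule
# An exaflop is 1,000 petaflops, so the rule would have predicted:
predicted_exaflop_year = PETAFLOP_YEAR + years_to_multiply(1000)

print(round(yearly_growth_factor(), 2))  # 2.0
print(round(predicted_exaflop_year))     # 2018
```

A thousandfold per decade works out to speed roughly doubling every year, which is why an exaflop machine would have been "due" in 2018.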

The new computer will open the era of the exaflop, a unit equal to a one followed by 18 zeros of operations per second. Researchers who study extreme weather events, such as the heat wave currently wreaking havoc in Spain, and climate change in general can start rubbing their hands, and so can biomedical researchers, vehicle designers and big data scientists. The military and energy administrations will also take part, through their respective agencies, in the development of the American project.

The impact on the study of new medicines is one of the most obvious. «The easy drugs, the little herb that heals, have already been found. Now practically every compound that reaches the market has come out of a computer», says Modesto Orozco, a scientist at the Instituto de Investigación Biomédica de Barcelona. «Pharmaceutical companies store millions of molecules in their chemical libraries, and these are hard to analyse experimentally without big computers. They have to be tested one by one, and also in combination with others. We can't use a billion mice», says Orozco. According to some estimates, the current 100,000 molecule tests will grow to a thousand million analyses a year. «Medicine will become more and more like engineering. New, bigger machines will have a "transversal" impact, above all on complex diseases such as cancer, [for analysing] the synergistic effect of drugs». It is not only about analysing a medicine or a combination of medicines, but also about how each patient reacts in their own way according to their genomic profile. «We are looking for personalized therapies. We want to know why some drugs are good for 90% of the population but very harmful for 5%. Those drugs might not make it to the market because they would not pass approval by the medicines agencies, which are very conservative, but that would not be the case if we could simulate in detail how they affect each specific person according to their profile».

For Orozco, Obama's announcement «is like when Kennedy said we had to get to the Moon. Building the machine is the headline, but what really matters is the effort needed to make it». The biomedical researcher is also director of the Life Sciences Area at the Barcelona Supercomputing Center-Centro Nacional de Supercomputación (BSC-CNS), which houses the only supercomputer Spain has: MareNostrum. It is 15,000 times less powerful than the planned machine, as measured by a benchmark, the Linpack program, which is run on every big computer to measure its speed under equal conditions. Even so, MareNostrum is an essential resource for 3,000 projects from research centers, universities and companies such as Repsol and Iberdrola.

Its director, Mateo Valero, also puts the announcement in perspective. «The most important thing is not the exaflop but that, for the first time, the United States wants three of its agencies (Defense, Energy and the National Science Foundation) to work together with its big companies and universities. 'A country that doesn't compute doesn't compete', they say».

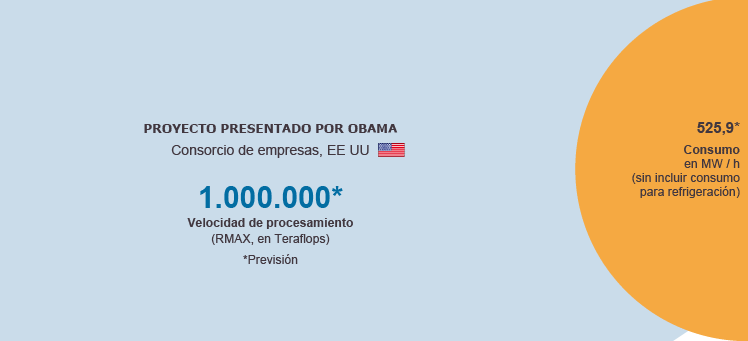

None of the experts consulted dares to give a date earlier than 2022 or 2025 for such a machine to come online. Its cost will be revealed in a few months. For reference, a project already under way, CORAL, aims to build three supercomputers of 150 petaflops each for a total of 525 million dollars. The new project would be six times more powerful.
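A quick check of what "six times more powerful" means, as a minimal Python sketch. The article does not say what the comparison is against, so the sketch assumes it refers to a single 150-petaflop CORAL machine and also shows the alternative reading against all three machines combined; the constant names are illustrative.

```python
PF_PER_EF = 1000          # 1 exaflop = 1,000 petaflops
CORAL_MACHINE_PF = 150    # capacity of each CORAL machine (from the article)
CORAL_MACHINE_COUNT = 3   # number of CORAL machines (from the article)
SPEEDUP = 6               # "six times more powerful" (from the article)


def to_exaflops(petaflops):
    """Convert petaflops to exaflops."""
    return petaflops / PF_PER_EF


# Assumed reading: six times ONE CORAL machine.
per_machine_reading = to_exaflops(CORAL_MACHINE_PF * SPEEDUP)
# Alternative reading: six times all three machines combined.
combined_reading = to_exaflops(CORAL_MACHINE_PF * CORAL_MACHINE_COUNT * SPEEDUP)

print(per_machine_reading)  # 0.9
print(combined_reading)     # 2.7
```

Only the per-machine reading lands close to one exaflop, which suggests that is the comparison intended.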

Processors are reaching the physical limits of miniaturization. To get this much computing power, the only solution is to put more and more processors into the supercomputer. The Tianhe-2 supercomputer has 6 million of these processors (a personal computer needs only one), compared with the 1,000 million the new one would need. «The technological challenge is big», says Valero. «A task has to be divided among 100 million [processors] for it to be executed». Neither current hardware nor current software is enough, and neither is the human element: «No single company can build them today». Nor is programmer training up to the task. That is why the United States has brought together all its strengths, from universities to government and industry.
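The idea of dividing one task among many processors can be illustrated at toy scale. The Python sketch below is a hypothetical example, not a description of how the new machine will be programmed: it splits a range of work items into near-equal chunks, hands each chunk to a worker thread that computes a partial result, and combines the partial results. Production codes on supercomputers such as MareNostrum typically use message-passing libraries like MPI across many nodes rather than threads on one computer, and in CPython these threads only show the structure, not a speed-up. `split_work`, `partial_sum` and `parallel_sum_of_squares` are names invented for this example.

```python
from concurrent.futures import ThreadPoolExecutor


def split_work(n_items, n_workers):
    """Split range(n_items) into n_workers contiguous chunks whose sizes differ by at most one."""
    base, extra = divmod(n_items, n_workers)
    chunks, start = [], 0
    for worker in range(n_workers):
        size = base + (1 if worker < extra else 0)  # the first `extra` workers take one more item
        chunks.append(range(start, start + size))
        start += size
    return chunks


def partial_sum(chunk):
    """Stand-in for one processor's share of the work: a sum of squares over its chunk."""
    return sum(i * i for i in chunk)


def parallel_sum_of_squares(n_items, n_workers):
    """Give each chunk to its own worker thread, then combine the partial results."""
    chunks = split_work(n_items, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        return sum(pool.map(partial_sum, chunks))


print(split_work(10, 3))               # [range(0, 4), range(4, 7), range(7, 10)]
print(parallel_sum_of_squares(10, 3))  # 285
```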

It is not the only difficulty the project faces. The new supercomputer will be able to forecast the future of Earth's climate with unprecedented detail and reliability, and far ahead in time: up to the end of this century. That aim can seem paradoxical, because the machine will need a great deal of energy to run, a consumption model that is not very sustainable for the environment. Its little brother, MareNostrum, draws 1 megawatt, or 1.5 megawatts once the energy needed to cool its processors is taken into account. Besides the environmental cost there is the economic one: an electricity bill of some 1.5 million euros a year. With current technology, the new supercomputer «would need more than 500 megawatts to run, not counting what it takes to cool it down», ventures the head of MareNostrum. For comparison, 500 megawatts is half the output of a Spanish nuclear power plant.
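Megawatts measure power, not energy, so a machine's yearly electricity use is its power draw multiplied by the hours in a year. The Python sketch below works through the article's MareNostrum figures under stated assumptions: that the 1.5 MW (including cooling) is drawn continuously all year and that the 1.5 million euro bill covers exactly that energy at a single flat price. The 500 MW line reuses the same implied price purely as a hypothetical extrapolation; real prices for a machine of that size would differ.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours; ignores leap years


def annual_energy_kwh(power_mw):
    """Energy used in one year by a machine drawing `power_mw` megawatts non-stop."""
    return power_mw * 1000 * HOURS_PER_YEAR  # MW -> kW, then kW x h = kWh


def implied_price_eur_per_kwh(annual_bill_eur, power_mw):
    """Flat electricity price implied by a yearly bill and a constant power draw (assumption)."""
    return annual_bill_eur / annual_energy_kwh(power_mw)


# Article figures: MareNostrum draws 1.5 MW with cooling; bill of about 1.5 million euros.
price = implied_price_eur_per_kwh(1.5e6, 1.5)
print(annual_energy_kwh(1.5))  # 13140000.0
print(round(price, 3))         # 0.114

# Hypothetical: a 500 MW machine (cooling excluded) billed at that same price.
exa_bill_eur = annual_energy_kwh(500) * price
print(round(exa_bill_eur / 1e6))  # 500 (million euros)
```

Under these assumptions the implied price is about 0.11 euros per kilowatt-hour, and a 500 MW machine would run up an electricity bill on the order of 500 million euros a year before counting cooling.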


The era of green computing has arrived ahead of the exaflop era, with processors that consume energy more efficiently. Researchers are working to cut this figure to 50, or even 20, megawatts. A current project, also in the US, the Summit supercomputer, will use only 10% more energy than the giant Titan, currently the second-fastest supercomputer in the world, but will have five to ten times its capacity.
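The efficiency trend in this paragraph can be expressed as two ratios. In the minimal Python sketch below, performance per watt is taken as capacity divided by power (an assumption that ignores differences in workload), "only 10% more energy" becomes a power factor of 1.1, and the improvement needed for an exascale machine is today's 500 MW divided by the 50 MW or 20 MW target. Because the article says "more than 500 megawatts", those required gains are lower bounds.

```python
def perf_per_watt_gain(capacity_factor, power_factor):
    """Improvement in work done per unit of power, new machine vs old machine."""
    return capacity_factor / power_factor


def required_gain(current_mw, target_mw):
    """Efficiency improvement needed to fit the same machine within `target_mw`."""
    return current_mw / target_mw


SUMMIT_POWER_FACTOR = 1.10  # "only 10% more energy than Titan"
summit_gains = [round(perf_per_watt_gain(c, SUMMIT_POWER_FACTOR), 1) for c in (5, 10)]
print(summit_gains)  # [4.5, 9.1]

EXASCALE_MW_TODAY = 500  # "more than 500 megawatts" with current technology (a lower bound)
print([required_gain(EXASCALE_MW_TODAY, t) for t in (50, 20)])  # [10.0, 25.0]
```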

This is good news for the environment, and it adds to the hopes that climate change researchers have placed in the announced machine. Friederike Otto, from Oxford University, coordinates climateprediction.net, a huge computing project for the study of climate change. «We want to simulate extreme weather events, but they need so much computing capacity that no current supercomputer could handle them on its own», she points out. Lacking such a machine, they request computing time on many computers around the world, but even so they do not get the computing power they want. She is hopeful about the project, as is her colleague Francisco Doblas, ICREA research professor and director of the Earth Sciences Department at the BSC.

«The biggest difference once the new supercomputer is in use will be the spatial resolution of our simulations up to the end of the 21st century, but not only that: it will also let us predict phenomena such as El Niño from now until the end of the year», says Doblas. The aim is to obtain a composite sketch of Earth's climate. And, continuing the photography analogy, current simulations of that future image of the Earth have pixels 50 kilometers wide. «By 2025, with the new supercomputers, that resolution could be as fine as one kilometer», says the researcher. To forecast how El Niño will evolve until December, these researchers need a computer such as MareNostrum, with 40,000 processors, to run 24 hours a day for a whole week.
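Going from 50-kilometer to 1-kilometer pixels is a bigger jump than the 50-fold change in width suggests, because the number of grid cells grows with area. The Python sketch below counts horizontal grid columns, assuming square cells and an Earth surface area of roughly 510 million square kilometers; it ignores vertical levels and time steps, which in practice usually also have to be refined at higher resolution, so the real increase in computation is larger still.

```python
EARTH_SURFACE_KM2 = 510e6  # approximate surface area of the Earth, in km^2


def horizontal_cell_factor(old_km, new_km):
    """How many times more grid columns cover the same area when cells shrink."""
    return (old_km / new_km) ** 2


def global_columns(cell_km):
    """Approximate number of square columns of width `cell_km` covering the globe."""
    return EARTH_SURFACE_KM2 / cell_km ** 2


print(horizontal_cell_factor(50, 1))  # 2500.0
print(f"{global_columns(50):,.0f}")   # 204,000
print(f"{global_columns(1):,.0f}")    # 510,000,000
```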


The film of how the global climate will evolve from now until the end of this century naturally requires even more computing time. Using 2,000 of MareNostrum's processors full time, it would take six months of calculations, Doblas explains. Simulating how an extreme weather event such as the current heat wave evolves would require running 10,000 simulations at 1-kilometer resolution, something «unthinkable» right now.
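The computing budgets Doblas describes can be compared in processor-hours (processors multiplied by hours of running time). The Python sketch below does that for the El Niño forecast (40,000 MareNostrum processors for a week) and for the end-of-century run (2,000 processors for six months). Treating "six months" as exactly half a year is our assumption, and `processor_hours` is a helper name invented for this example.

```python
HOURS_PER_DAY = 24


def processor_hours(processors, days):
    """Processor-hours used by `processors` running around the clock for `days` days."""
    return processors * HOURS_PER_DAY * days


el_nino = processor_hours(40_000, 7)       # El Nino forecast: one week on 40,000 processors
century = processor_hours(2_000, 365 / 2)  # end-of-century run; "six months" taken as half a year

print(f"{el_nino:,}")               # 6,720,000
print(f"{century:,.0f}")            # 8,760,000
print(round(century / el_nino, 2))  # 1.3
```

By this rough measure, the century-long run needs about 30% more processor time than a one-week El Niño forecast, spread over far fewer processors for far longer.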

As with combining millions of molecules or with patients' genetic profiles, forecasting global climate change means taking many variables into account. «We are starting to understand how the dynamics of the oceans, polar ice and other systems work, but we now need to combine that data in order to know how they influence each other, and also how climate change works at a smaller scale, in specific areas of the world», explains Doblas.

The goal is to confirm theories: «We want to understand the biophysical processes linked to climate, land use, the interaction with ocean systems, the aerosols deposited on the sea surface, and the evolution of sea ice around Antarctica», he says by way of example. For Doblas, the new computer will produce a film of the future with «more pixels, more characters and more colours» than he and his colleagues can create today.

Computing time

Unlike in the United States, in Europe the cost of using a supercomputer falls mainly on the body that manages it, provided the purpose is public research. Private companies are charged for the electricity consumed plus the labour costs of the computer's operators and, in any case, the amortization of the equipment.

Projects are selected by a technical committee and a scientific committee, which allocate hours on the computer according to each project's interest.

Source: El País.com